Who this is for: Studio leads, previs/tech directors, and research buyers who want a fast, practical path from generated shots to interactive scenes.

What you’ll get: Three minimal, end‑to‑end pipelines (video → depth/flow → mesh proxy → web‑playable) plus two drop‑in code samples and a live demo you can try in minutes. Each pipeline highlights where Rosebud is the glue (prompting, asset pass‑through, scene composition, web export).

TL;DR

- You don’t need a perfect 3D reconstruction to preview interactivity. A proxy mesh (a simplified placeholder 3D model, like a flat plane or rough blockout, used instead of a full detailed reconstruction) displaced by a depth map (or warped by flow) is often good enough to iterate on framing, timing, and collision.

- Rosebud sits in the middle: capture generation prompts, track shot metadata, package assets, and export a playable scene to the web (Three.js or Babylon.js) or back into your DCC.

- Start simple (single plane + depth). When you need accuracy, step up to flow‑guided warps or multi‑view splats/meshes.

What you’ll try in Rosebud

- Template: Video → Depth Mesh — paste a shot URL and a depth PNG; Rosebud auto‑wires a Three.js scene with orbit controls and a basic collider. Export to web.

- Template: Flow‑Warped Background Plate — import a shot and optical flow; Rosebud builds a time‑stable quad warp so background parallax matches camera motion.

- Template: Mesh Proxy + Video Texture — bring in a quick proxy (plane or rough photogrammetry), project the shot as a video texture, and block gameplay.

Pipeline 1 — Single‑Plane Depth Mesh (fastest)

Best for: animatics, framing tests, quick cutscene blocking.

Ingredients

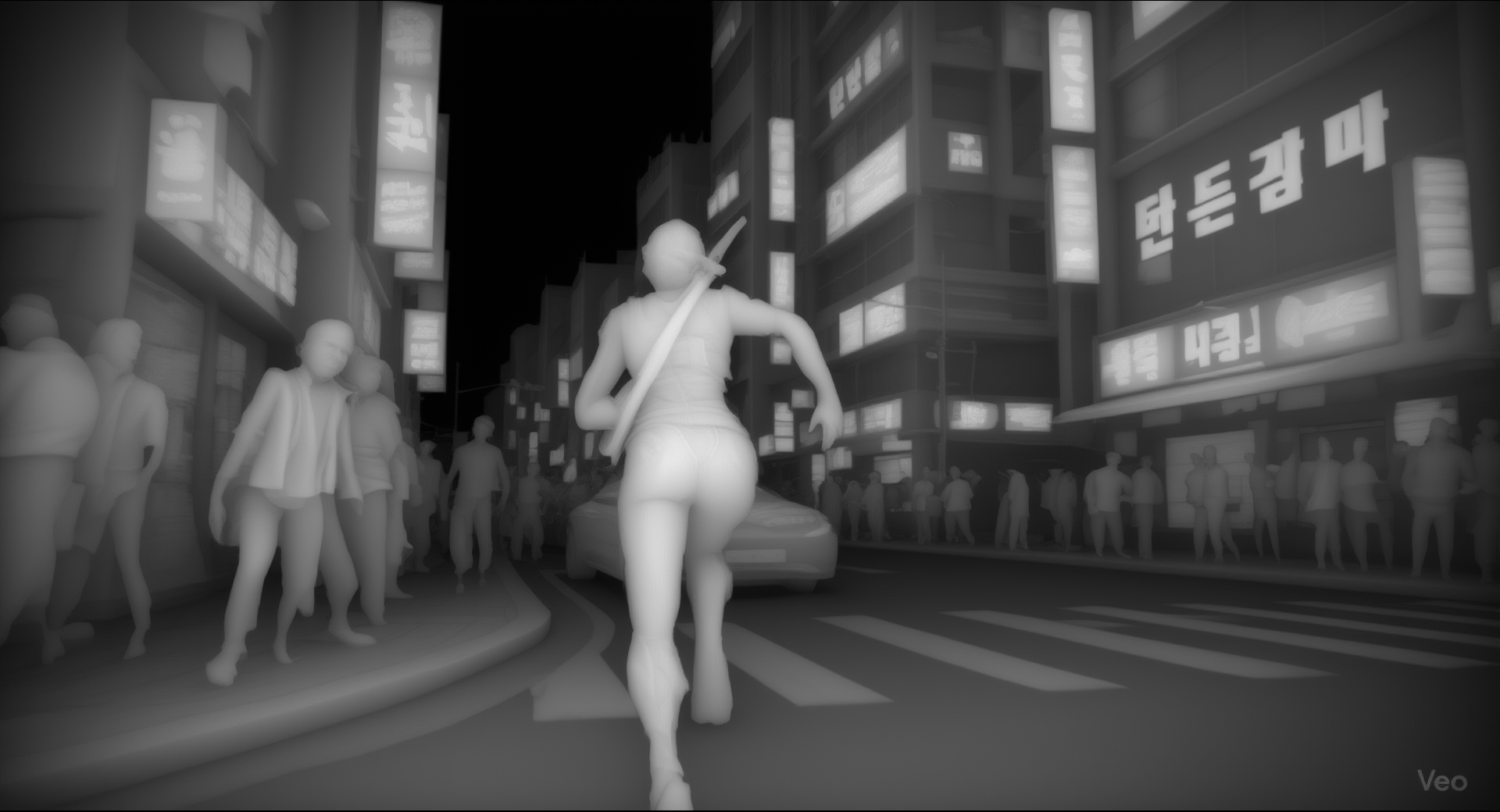

- Shot (MP4/WebM), e.g., a generated clip or a background plate. You can use video gen models like Runway Aleph/Gen 4, Genie 3, or Veo 3. Read our comparison of leading video models here.

- Depth map (single grayscale PNG matching the shot’s reference frame). We used SculptOK for the demo.

- Rosebud to package assets and export a Three.js scene.

Steps

- Shot planning. In Rosebud, pin the clip and set field‑of‑view, focal length, and intended gameplay camera limits.

- Depth. Generate or import a depth map for the key frame (Rosebud accepts PNG/TIFF). Optionally refine in‑editor. You can use tools like MiDaS (Intel ISL) or ZoeDepth.

- Proxy mesh. Start with a subdivided plane; displace vertices by depth (centered around mid‑gray). Project the video as a texture.

- Playable export. Rosebud exports a ready‑to‑host Three.js scene with orbit/gamepad controls and simple colliders.
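
The proxy mesh step above comes down to one line of arithmetic per vertex. Here is a minimal CPU-side sketch of that idea, assuming an 8-bit grayscale depth image stored row-major and a plane facing +Z. The helper name `displacePlane` and its parameters are illustrative and are not part of Rosebud or Three.js.

```javascript
// Hypothetical helper: builds vertex positions for a subdivided plane and
// pushes each vertex along +Z by its depth sample, centered on mid-gray.
// depth: array of width * height grayscale values (0..255), row-major.
function displacePlane(depth, width, height, depthScale = 0.35, aspect = 9 / 16) {
  if (depth.length !== width * height) {
    throw new Error('depth length must equal width * height');
  }
  const positions = new Float32Array(width * height * 3);
  for (let row = 0; row < height; row++) {
    for (let col = 0; col < width; col++) {
      const i = row * width + col;
      const u = width > 1 ? col / (width - 1) : 0.5;
      const v = height > 1 ? row / (height - 1) : 0.5;
      const d = depth[i] / 255; // normalize to 0..1
      positions[i * 3] = u - 0.5; // x in [-0.5, 0.5]
      positions[i * 3 + 1] = (0.5 - v) * aspect; // y, top row is +y
      positions[i * 3 + 2] = (d - 0.5) * depthScale; // mid-gray stays near z = 0
    }
  }
  return positions;
}
```

Values above mid-gray move toward the viewer and values below it move away, so the plane's average position stays close to where the flat plate sat. Doing the same displacement in a vertex shader (as in Code Sample A) avoids rebuilding the buffer when depthScale changes.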

Why it works

- It’s instant: no heavy reconstruction. You get convincing parallax and pacing for pitch/previs.

- Designers can tune depthScale, camera rails, and trigger zones in Rosebud without writing code.

Pipeline 2 — Flow‑Warped Plate (time‑stable parallax)

Best for: moving cameras / moving subjects where a single depth map flickers.

Ingredients

- Shot (sequence of frames).

- Optical flow between successive frames.

- Rosebud to package a flow warp node that stabilizes the plate over time.

Steps

- Shot planning. Mark hero objects that must stay stable across frames (characters, props).

- Flow. Import per‑frame optical flow (u,v). Rosebud stores flow alongside frames.

- Proxy warp. Drive a quad (or small grid) with accumulated flow to mimic camera motion and background parallax.

- Playable export. Rosebud emits a Three.js or WebGL shader that warps the plate per frame and exposes a time scrub and interaction hooks.
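
To make the "accumulated flow" step concrete, here is a minimal sketch that advects a few control points through a sequence of flow fields. It assumes each flow field stores per-pixel displacement in pixels from one frame to the next, and it uses nearest-neighbor lookup for brevity. The function name `accumulateFlow` and the data layout are illustrative assumptions, not Rosebud's storage format.

```javascript
// Hypothetical helper: advects control points through a sequence of flow fields.
// flows: array of { width, height, u, v } where u and v are row-major arrays
//        of per-pixel displacement (in pixels) from frame t to frame t + 1.
// points: array of { x, y } starting positions in pixel coordinates.
// Returns one array of positions per frame, starting with the input points.
function accumulateFlow(flows, points) {
  const clamp = (value, lo, hi) => Math.min(hi, Math.max(lo, value));
  const tracks = [points.map((p) => ({ x: p.x, y: p.y }))];
  for (const flow of flows) {
    const prev = tracks[tracks.length - 1];
    const next = prev.map((p) => {
      // Sample the flow at the point's current (already moved) position.
      const col = clamp(Math.round(p.x), 0, flow.width - 1);
      const row = clamp(Math.round(p.y), 0, flow.height - 1);
      const i = row * flow.width + col;
      return { x: p.x + flow.u[i], y: p.y + flow.v[i] };
    });
    tracks.push(next);
  }
  return tracks;
}
```

Feeding the four corners of the plate in as `points` gives per-frame corner positions for a quad warp; a small grid of points works the same way. A production version would likely use bilinear sampling and some drift correction, both omitted here.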

Why it works

- Minimizes “boiling” from depth flicker.

- Great for previs where the audience feels the space even if geometry is approximate.

Pipeline 3 — Mesh Proxy + Video Projection (most robust)

Best for: hero shots, blocking paths and collisions, or mixing with real 3D assets.

Ingredients

- Shot (MP4/WebM) + optional depth/flow for registration.

- Proxy mesh (plane → rough photogrammetry → cleaned retopo as needed).

- Rosebud to bind a Babylon.js scene with the video projected and gameplay controls.

Steps

- Shot planning. Lock key camera poses; set near/far planes and intended navigation bounds.

- Proxy. Import a quick mesh (from a splat/photogrammetry step or a handcrafted blockout). Rosebud aligns it to the shot.

- Projection. Project the video onto the mesh (UVs or world‑space project). Add colliders/pathing.

- Playable export. Use Rosebud’s web exporter to ship a Babylon.js scene with character controller and triggers.
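
The world‑space projection option in the steps above can be sketched as a pinhole projection: each mesh vertex is projected through the shot camera, and the result is used as its video UV. The sketch below assumes the vertex is already in camera space and that the camera looks down -Z (the Three.js convention; Babylon.js is left‑handed by default, so the sign of z would flip). The name `projectToVideoUV` is hypothetical.

```javascript
// Hypothetical helper: projects a camera-space point through a pinhole camera
// looking down -Z and returns the video UV it lands on, or null if the point
// is behind the camera or outside the frame.
function projectToVideoUV(point, fovYDegrees, aspect) {
  const [x, y, z] = point;
  if (z >= 0) return null; // at or behind the camera plane
  // Half-extent of the view frustum at this depth.
  const halfHeight = Math.tan((fovYDegrees * Math.PI) / 360) * -z;
  const halfWidth = halfHeight * aspect;
  const u = 0.5 + (0.5 * x) / halfWidth;
  const v = 0.5 + (0.5 * y) / halfHeight;
  if (u < 0 || u > 1 || v < 0 || v > 1) return null;
  return { u, v };
}
```

Vertices that return null are not covered by the shot, so they need a fallback color or texture. This is also why locking the camera pose and field of view in the first step matters: the projection is only correct for the camera the shot was rendered from.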

Why it works

- Collisions and occlusion are predictable.

- You can gradually replace proxies with final assets without breaking shot timing.

Code Sample A — Three.js Depth‑Displaced Plane (video texture)

Use this when you have one video and one depth PNG for a reference frame. In simplest terms: the video is mapped onto a flat 3D plane, and the depth map pushes parts of that plane forward or backward, so orbiting the camera produces fake 3D parallax. Paste the code into the Rosebud code tab, or ask Rosie, our AI, to find the right place for it in your project. For more detail, see the Three.js docs.

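// Runs as an ES module (top-level await). Assumes `scene`, a camera, and a renderer already exist; that setup is omitted for brevity.

import * as THREE from 'three'

const video = document.createElement('video')

video.crossOrigin = 'anonymous' // set before src so the request is CORS-enabled

video.src = 'clip.mp4'

video.loop = true

video.muted = true // browsers block autoplay of unmuted video

video.playsInline = true // keep mobile browsers from going fullscreen

await video.play()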

const videoTex = new THREE.VideoTexture(video)

const depthTex = await new THREE.TextureLoader().loadAsync('depth.png')

const geom = new THREE.PlaneGeometry(1.0, 1.0 * 9/16, 256, 256)

geom.rotateX(-Math.PI/2)

const material = new THREE.ShaderMaterial({

uniforms: {

uVideo: { value: videoTex },

uDepth: { value: depthTex },

uDepthScale: { value: 0.35 },

},

vertexShader: `

varying vec2 vUv; uniform sampler2D uDepth; uniform float uDepthScale;

void main(){ vUv = uv; float d = texture2D(uDepth, uv).r; vec3 displaced = position + normal * (d - 0.5) * uDepthScale; gl_Position = projectionMatrix * modelViewMatrix * vec4(displaced,1.0); }

`,

fragmentShader: `

varying vec2 vUv; uniform sampler2D uVideo; void main(){ gl_FragColor = texture2D(uVideo, vUv); }

`

})

const mesh = new THREE.Mesh(geom, material)

scene.add(mesh)

Rosebud tip: Bind uDepthScale to a UI slider in the editor so designers can tune parallax without code.
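
Outside the Rosebud editor, the same binding can be sketched with a plain range input. This is a minimal sketch that assumes a normalized slider (0 to 1) and the 0.25–0.45 range suggested later in this article; the helper name `applyDepthScale` is hypothetical.

```javascript
// Hypothetical helper: maps a normalized slider position (0..1) onto a
// depthScale range and writes it into a ShaderMaterial-style uniforms object.
function applyDepthScale(uniforms, sliderValue, min = 0.25, max = 0.45) {
  const t = Math.min(1, Math.max(0, Number(sliderValue))); // clamp to 0..1
  uniforms.uDepthScale.value = min + (max - min) * t;
  return uniforms.uDepthScale.value;
}

// Browser wiring, assuming <input type="range" min="0" max="1" step="0.01">:
// slider.addEventListener('input', (e) => applyDepthScale(material.uniforms, e.target.value));
```

Because the displacement happens in the vertex shader, changing the uniform takes effect on the next rendered frame without rebuilding geometry.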

Code Sample B — Babylon.js Video Texture on a Proxy Mesh

Use this when you have a rough mesh (plane → photogrammetry) and want stable collisions. You can also experiment with proxy meshes and more in the Babylon.js Playground.

// Assumes `canvas` is an existing <canvas> element on the page.

import { Engine, Scene, ArcRotateCamera, HemisphericLight, MeshBuilder, VideoTexture, StandardMaterial, Vector3 } from '@babylonjs/core'

const engine = new Engine(canvas, true)

const scene = new Scene(engine)

const camera = new ArcRotateCamera('cam', Math.PI/2, Math.PI/3, 3, Vector3.Zero(), scene)

camera.attachControl(canvas, true)

new HemisphericLight('light', new Vector3(0,1,0), scene)

// Load or build a proxy mesh (replace with SceneLoader.ImportMesh for GLB)

const proxy = MeshBuilder.CreateGround('proxy', { width: 4, height: 2.25, subdivisions: 64 }, scene)

// Project the shot as a video texture

const mat = new StandardMaterial('mat', scene)

const vtex = new VideoTexture('vid', ['clip.mp4'], scene, true, true)

mat.diffuseTexture = vtex

mat.emissiveTexture = vtex // keep it bright for previs

proxy.material = mat

engine.runRenderLoop(()=> scene.render())

Rosebud tip: Add Rosebud’s first‑person controller block and set the proxy as a collider to walk the shot.

“What to try in Rosebud” blocks

- Prompt (cutscene plate): “Wide dolly‑in on neon alley at night; slow rain; soft rim‑light on hero.” → Generate 4 takes at 4–6s.

- Export: video/mp4 + pick 1 keyframe → generate/import a depth PNG.

- Compose: drop into Depth Mesh template and tune depthScale = 0.25–0.45.

- Prompt (b‑roll plate): “Drone pass over desert highway; heat haze; golden hour.” → Import optical flow.

- Compose: use Flow‑Warped Plate (e.g., a drone shot stabilized with optical flow so the horizon feels solid instead of wobbling) to stabilize distant parallax.

- Prompt (hero shot): “Interior cathedral, orbit around statue; lens flare; 35mm.”

- Export: quick photogrammetry proxy (or blockout in Rosebud), then project the shot as a Babylon video texture.

Exporting & Linking

- Web export: One‑click from Rosebud → zipped Three.js/Babylon.js project with index.html and an asset folder.

- DCC handoff: Export glTF (proxy), MP4/WebM (plate), PNG/TIFF (depth), NPZ/EXR (flow). Rosebud maintains a manifest so revisions stay in sync.

Ready to start creating? Head to Rosebud!